Key Takeaways from Paul Snow’s Interview on the Credibly Neutral Podcast

- Background and Expertise: Paul Snow, CEO of IDD Labs and chief architect of Accumulate Network, brings a rich history in computer science, with a master’s degree in threaded interpreters and prior roles at Microsoft, IBM, and developing rules engines for Texas government policy automation, later adapted by other states.

- Blockchain Journey: Transitioned into blockchain from a robust software career, drawn to Bitcoin’s auditable, permissionless value system in 2013. Contributed to Ethereum’s early EVM design, leveraging expertise in threaded interpreters, and founded Factom in 2014 to create a Bitcoin data layer.

- Accumulate Network Overview: Evolved from Factom, Accumulate addresses data integrity and scalability with a focus on digital identity, data curation, and cryptographic proofs. It supports complex use cases like supply chain transparency, medical wallets, and border security by enabling selective data validation.

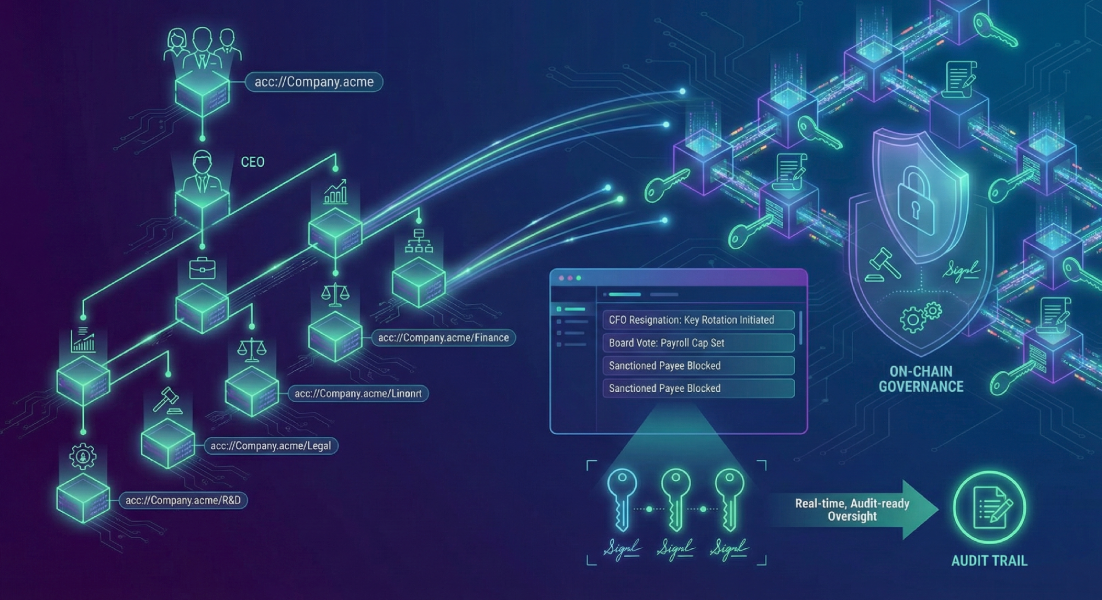

- Unique Features of Accumulate: Uses URL-based addressing for accounts and identities, operating as an Internet protocol. Its key book system supports flexible, multi-signature authorization, enhancing security and developer experience while minimizing blockchain audit requirements.

- Data Integrity and AI Synergy: Accumulate ensures data fidelity for AI applications, providing cryptographic proofs to distinguish real from AI-generated content, critical for maintaining trust in data-driven systems.

- Real-World Applications: Supports account abstraction for cross-chain token management and smart contract validation. Enables transparent governance and auditable supply chains, with past projects including medical wallets for the Gates Foundation and lumber tracking for U.S. Customs.

- Vision for Blockchain’s Future: Foresees a multi-chain, cross-chain ecosystem with Bitcoin as the foundational value layer due to its auditability and divisibility, surpassing gold. Other blockchains like Accumulate will handle data and niche applications.

- Future of Tech with AI and Blockchain: Envisions AI and blockchain revolutionizing software development, eliminating traditional programming languages and operating systems, with secure, automated systems deployed via AI and validated by blockchain. Git remains a pivotal tool for code management.

- Societal Impact: Emphasizes blockchain’s role in fostering honest, transparent systems to combat misinformation and scams, aligning with free speech principles. Sees AI and blockchain as intertwined for auditing and accountability in an increasingly complex digital world.

The Interview

Introduction and Paul’s Background

Michael Lesane, 00:02

You're listening to the Credibly Neutral Podcast, a production of Cryptobit Magazine hosted by Michael Lesane. Today I'm speaking with Paul Snow, the CEO of IDD Labs and the chief architect of Accumulate Network, formerly Factom Protocol, which IDD supports as a core developer. His ongoing work in the Accumulate ecosystem supports a myriad of on-chain use cases, including complex authority structures and data availability, as well as cross-chain tokenization and asset management. It was utility of this nature which attracted the attention of Inveniam, a data management platform for private equity markets, which acquired Factom Incorporated four years ago and under which IDD Labs was initially formed. Prior to launching Factom in 2014, Paul was actively involved in the Bitcoin ecosystem as a developer, as an avid conference organizer, and as an advocate briefing Congress on the value of blockchain technology on at least one occasion.


Michael, 01:00

He was also one of the founding developers of Ethereum. This all came following a lengthy and accomplished career in the software industry, including contributions at Microsoft and IBM, as well as developing decision table rules technology used by the Texas government for policy automation. Ports of this technology have also been used by the state governments of Colorado, California and Michigan, and it is being repurposed for on-chain compliance applications by C3 Rules, which Paul advises. This all provides for a truly foundational and evergreen individual in both the blockchain industry and the broader technology industry, who I look forward to speaking to in greater depth in this episode. So, Paul, we go back a little while now, but do introduce yourself to listeners, particularly if there's anything I've overlooked or got wrong in the introduction.

Paul Snow, 01:49

Well, thank you, Michael, for allowing me this opportunity to talk to you and your listeners. My background is in computer science. I did a master's degree in developing threaded interpreters and creating novel development environments, basically built on Forth, which fed into working with PostScript and printing early in my career, and later into building interpreters for running rules engines, in particular rules engines to support eligibility determination for the State of Texas. So basically, some of the early beginnings of AI, expert systems and whatnot. Not trying to create reasoning models, but trying to create mechanisms for organizing data and information and making that data and information accessible in applications.

Paul, 03:10

That sort of interpreter is at the core of Bitcoin and at the core of Ethereum and that's kind of what led me into doing some small contributions for Ethereum in the early days, particularly around the design of the EVM. I did develop a huge appreciation for data systems and some of the problems that we have in society around managing valid data. Valid data is our protection. It's basically the wall against the hordes of scammers and dishonest people in society. Having good quality, trustable data is how we protect ourselves and how we create honest systems. This is at the basis of free speech in the United States, because the first defense against lies and misinformation is more speech and more information.


Paul, 04:28

All that feeds right into the Bitcoin mindset and the Bitcoin philosophy of an auditable record and an auditable set of data that can underlie a monetary system. And that underlies all of crypto, and it underlies Ethereum, and it underlies basically everything that we're doing. And so, when I discovered the crypto scene, I totally fit in. I totally got it and, since maybe 2013, have just devoted almost every waking hour to promoting and building this ecosystem. So that's kind of who I am and where I came from.

Michael, 05:25

So it's like there was a seamless transition, well, not necessarily seamless, but a relatively smooth transition from your previous work to areas of working interest in the early blockchain space.

Paul, 05:39

Yeah, it was pretty seamless. I mean, it was as if I had prepared for the crypto space. All of the work I did before, everything that I was thinking about. I'll be honest, I had a long career before crypto showed up. And I have fought with computer systems and fought with computer configurations and how you deploy software into data centers. Well, we weren't calling them data centers in the early days, but just how to put them onto servers and how to create these systems that run on top of the Internet. And one of the big problems that you have is just how do you manage and control that and create deployable systems that are dependable and reliable and secure. And so, when crypto showed up, it was just like this was a solution I was always looking for.

Paul, 06:54

How do you create a foundational set of data, of images, of configurations that are beyond manipulation, you know, in real time, and then build on that to create solid, reliable systems? And yeah, crypto is really the solution to all that. And we haven't scratched the surface yet. There is a lot to be done with secure data that we haven't even begun to touch. And oh, by the way, there are huge forces in this world that don't want us to go there. Reliable data is honesty. And unfortunately, a lot of people equate honesty with liability. And as long as we submit to that, and admit to the forces that be that we will tolerate dishonesty because limiting liability is so important, well, that's wrong. We really need to get to a place where we embrace honesty and accept responsibility.

Michael, 08:27

So I suppose we can begin by talking more about Accumulate Network. What is Accumulate and what are its capabilities?

Paul, 08:36

So to talk about Accumulate, let me start with Factom. So, Factom was a protocol I started in 2014. The idea was to create the simplest basic data layer that you could create for Bitcoin. It didn't have any sort of limitations in terms of how much data you could record or how many transactions Bitcoin could process, or how expensive it was to process on top of Bitcoin, because there's a whole set of use cases that are based on data. Things like supply chain, where you need to know where a product came from and have the digital signatures and digital identities of the parties that produced, transported, managed, did QA, and did distribution of a product, so that by the time, let's say, you open a medicine bottle and take two pills, there's an actual record of where those pills came from and who produced those core components.

Paul, 10:00

And the confidence that none of that was compromised between, let's say, components that were produced in different countries, transported in different ways, combined by certain parties, and produced and transported and then finally sold to you. You should have confidence in that entire chain. We don't have that. You open a bottle today and take some medicine. You're just hoping that everything was good. There's no transparency, auditability, or responsibility in the supply chain. I mean, there is, but it's spotty and it's almost impossible to track. And so, with that need in mind, I built Factom. And using the basic principle of do the least possible, Factom was a data layer for Bitcoin such that every record was secured by the entire security of Bitcoin itself. That was the goal.

Paul, 11:15

Now, Factom ran as Factom all the way up until 2020 and did its job. And there were a number of contracts and projects that we built on top of Factom, including a medical wallet for the Gates Foundation targeting South Africa, so that individuals with this medical wallet would be able to go into a clinic, even if they've never been there before, and, with control by the individual themselves, provide all the medical records to the clinic to have proper treatment. Now, this would save millions of lives, or certainly many lives, because a lot of medical treatment needs to be consistent and managed within the whole context of what has been done with the patient. And if you can't reliably bring those medical records, a doctor can't give you top-quality treatment.

Paul, 12:36

By the way, we have that problem in the US. People get some treatments from this doctor, some treatments from that doctor, they change jobs and their health insurance changes. And in the course of all this, their medical records are scattered all over the place. And it's very difficult to bring those medical records together and hand them to a new care provider. And so, this medical wallet work we did was pretty groundbreaking and pretty incredible. Now, like many grants from the Gates Foundation or any other such entity, I know that work was done, it was proven, it was validated, but it didn't really go anywhere. We had the opportunity to push that as a commercial product, but we always run into the barrier of good, accountable records in the control of an individual.

Paul, 13:43

That idea is not necessarily in the best interest of all the providers around them for various reasons. Certainly, liability plays a role, but also a desire to control information and some misguided ideas by governments, like HIPAA records, which somehow or other don't just protect your information, they protect your information from you. And that's a problem. But let's set that to the side. We also did some work on supply chains with the customs part of Homeland Security.

Paul, 14:31

And our particular work for several contracts was to track lumber produced in South America and other places and ensure that when you got to customs you had a clear bill of sale or a clear auditable trail of origin that showed that the lumber was properly permitted and harvested and properly handled and brought to the border of the United States, and it was now going to be imported. And that supply chain work would make those products come into the US quickly, knowing that this wasn't lumber that was basically poached out of the Amazon. We also did work on the border. One of the ideas, as far as border security goes, is to monitor the border and detect entry by those that would cross the border illegally.

Paul, 15:41

And not necessarily creating a literal wall, but a virtual wall that's able to detect these incursions. But not just the incursions of people wanting to come across the border, but also incursions by drug cartels and others that would like to bring in contraband of various kinds, certainly fentanyl, and weapons might even be going the other direction, but to stop this kind of traffic. Now, what is true is that the drug cartels pay more and have better IT departments than really the government does, and certainly border security. And we did some very interesting work to create cryptographic proofs of those devices to ensure they're not compromised. And that gets us to Accumulate. Factom always ran into resource restrictions. Our opportunities always outstripped our ability to execute.

Paul, 17:01

A lot of that had to do with not embracing the crypto game plan for raising resources and building projects and incorporating the whole crypto community into what we're doing. And so that ran into some issues. Furthermore, Factom itself being such a low-level data layer meant that digital identity was a layer on top of the data layer. And also, the mechanisms for correlating data across Factom were more complicated than they had to be. And what we did was use over six or seven years of experience on Factom to build a new protocol that incorporated better data encapsulation on the blockchain, which amounts to smaller, simpler proofs of data on the blockchain, and digital identity to create more flexible, more expandable security of that data.

Paul, 18:22

So the ability to curate data, where a data set is actually curated as it's built, as opposed to Factom, where curation was also something that was kind of a second layer. So curating data, which amounts to deciding what is valid data and what is not valid data. Doing that in the second layer and doing the digital identity in the second layer and doing the collaboration, the multisig type operations, in the second layer, all of that made building applications on Factom harder than it had to be. So, when we reimagined Factom into Accumulate, we subsumed all the data that Factom had collected and all of the tokens and everything else that Factom represented, and we put that into Accumulate, and we rebuilt the cryptography underneath Factom, and we implemented all of the sharding required to eliminate transaction limits.

Paul, 19:46

You know, how many transactions we could perform per second. And we reduced how much data was required to have a cryptographic proof of a token account or a data account or any of the other information that's being organized on Accumulate. The result is a protocol that allows you to have full cryptographic proof of the data sets or the tokens that your wallet is concerned about, and allows you to ignore 99, maybe 99.999% of the rest of the data that might be managed in Accumulate. It goes back to an observation that I have made in the past, which is any cryptographic layer that is going to handle the data problem has to support selfishness.


Paul, 20:57

And by selfishness, I mean, an application that has data in the blockchain needs to be able to manipulate and validate that data while ignoring all the data they don't care about. You have to be able to create cryptographic proofs of your data because that's your problem, and ignore other people's data because that's their problem. And Accumulate really does address this and really does solve this issue.

Michael, 21:39

So I guess in a way it's simultaneously polishing user experience, sorry, developer experience, and also allowing for more expressive capabilities. And it's basically mitigating the tragedy of the commons with respect to different users or developers building on the blockchain or platform.

Paul, 22:00

Yeah, exactly. I mean, the way Accumulate works is it imagines that we have these data sets, we have multiple parties who are interested in these data sets as they're being built, and it creates them on a platform that allows that information then to be deployed into other uses and applications, and does that in a developer-friendly way. Yeah, you made a really good point when it comes to the developer and the developer's role here, because without the standardization of the cryptographic proofs and without the standardization of how curation is done, this rapidly becomes a massive headache for developers, because there's an infinite number of ways all of these things can be done. So how do we build something that is interchangeable and reusable if we don't standardize inside of the protocol some of these core ideas?

Paul, 23:20

So it is about making it easier for the developers and that makes it easier for the users.

Michael, 23:27

That makes sense. So, what integrations today best demonstrate real-world applications of Accumulate Network?

Paul, 23:36

Well, that's a great question because right now we've got people who are building account abstraction solutions that allow users to use Accumulate to manipulate smart contracts on other platforms and to move tokens about and that sort of thing. The Accumulate model allows you to build very complex applications. Even something that looks just like, acts just like a centralized exchange, as long as you're operating against and validating against a data layer that has a cryptographic proof of correctness. And let's say, take a centralized exchange as an application. This is not one of our current applications, so I guess in some sense I'm skipping a little out of your question, but it's illustrative of what we're doing. A centralized exchange might create terabytes and terabytes of data that would have to be validated to trust the entire exchange.

Paul, 25:10

But if we apply the selfish principle, it asks: the balances I have in my account, are they being managed properly? And the trades that I'm participating in, are those trades being managed properly? And that's a vastly smaller subset of data. And that smaller subset of data has its own cryptographic proofs that my code can validate. And if my code is validating that I am being properly treated, and if I'm able to query, let's say, somebody else's claim that they weren't properly treated, evaluate that and prove that they weren't, or counter that and prove that they were validly treated, and the person claiming that they were not treated fairly is actually full of crap or something.

Paul, 26:21

Okay, as long as you can do that, then it doesn't matter how complex the system is, because the system operating against validated data with validated audit trails of operations can itself be validated. And this allows someone to effectively run the centralized exchange as a smaller version of it that is validating a portion of that exchange. The sum of all the users doing this means that the sum of all users are validating everything a centralized exchange is doing, which now makes it decentralized, because every operation is following a set of rules defined by software and those rules are validated against the data. And that's all a decentralized exchange is. So, getting back to what we're doing today: we're creating account abstraction. And that's a core piece of what that hypothetical centralized exchange could be doing.

Paul, 27:36

And we're validating smart contracts and how some smart contracts are controlled. And that's part of this. And we are simulating governance where multiple parties, each of which could be a corporate entity with its own decision-making structure, all on Accumulate, can produce approvals of action that feed into a sort of corporate decision-making process and even operational process. So once fully automated with AI integrations, you have the possibility of managing an organization in some sort of completely honest, transparent way where any portion of it can be examined and evaluated by anybody in real time. And we're building those Legos, we're building those pieces. We're not there yet. We don't have the AI integration, we don't have a lot of automation of the verification of our account abstraction and so forth.

Paul, 29:03

But I believe we are rapidly going to get there. And this is basically where we are with Accumulate and where we're headed.

Michael, 29:17

So it seems like there's an overlap between the rules-based auditability facilitated by Accumulate and the policy and regulatory auditability of your rules engines. What are the broader implications or applications of this auditability?

Paul, 29:33

Well, that is a very complex question. Let's see if I can address it a bit. One of the observations about regulatory compliance is that regulatory compliance is at least intended to be deterministic. In other words, one should be able to know whether one is regulatorily compliant or not. Now, there is a bit of a double standard that the government plays. For example, if you're going to be eligible for any sort of assistance program or program like Social Security or whatever, some sort of benefits program, there are strict rules that are defined and those rules are applied in a certain order. And the net result is you are eligible for X. Right. And that's all mathematically constructed eligibility determination for assistance programs.
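As an editorial illustration of the point above, here is a minimal Python sketch of deterministic eligibility determination: rules held in a fixed order and applied to a household record, so the same inputs always give the same answer. The rule names, thresholds, and program names are hypothetical and are not taken from any real state policy or from the rules engine discussed in the interview.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    applies: Callable[[dict], bool]  # condition evaluated over household facts
    program: str                     # program granted when the condition holds


# Hypothetical rules; real eligibility policy is far larger and more detailed.
RULES = [
    Rule("low_income", lambda h: h["monthly_income"] <= 2000, "food_assistance"),
    Rule("has_children",
         lambda h: h["children"] > 0 and h["monthly_income"] <= 3000,
         "child_care_subsidy"),
    Rule("senior", lambda h: h["oldest_member_age"] >= 65, "senior_services"),
]


def determine_eligibility(household: dict) -> list[str]:
    """Apply every rule in its fixed, listed order and collect the programs."""
    return [rule.program for rule in RULES if rule.applies(household)]


household = {"monthly_income": 1800, "children": 2, "oldest_member_age": 41}
print(determine_eligibility(household))  # ['food_assistance', 'child_care_subsidy']
```

Because the rule list and its order are fixed, running the function twice on the same household cannot produce different results, which is the property the interview contrasts with AI-style reasoning.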

Paul, 30:50

Given that there are many overlapping assistance programs and even different perspectives by which one might be eligible for assistance programs, I would say that the typical state managing, let's say 50 to 80 assistance programs that are available statewide versus federally and so forth, there could be as many as 30,000 rules that you have to apply to a household in order to determine those eligibility options. And they are options because there are times when you can choose this option or that option in these assistance programs. So, it's a very complex thing, not an AI thing, because an AI will generally take a set of rules and come up with a solution, but not necessarily apply those rules in a prescribed fixed order. And so, AI can reason about a lot of things that can't possibly be put into deterministic rules.

Paul, 32:07

For example, looking at a whole body of facts and choosing an investment strategy, an AI can do that, but a set of deterministic rules really can't, because everything is too dynamic and the histories are fuzzy. And some of the rules of thumb being applied are not something that I can deterministically define and define the application thereof. Now, what Accumulate does is create this body of data and create cryptographic proof of this body of data, so that I absolutely know what the state of that data is at a particular time. I mean, that's absolutely something that can be used for eligibility determination in terms of all the data collection and all the sources and all the verification of that data that has to be done.

Paul, 33:15

By the way, there are many interfaces into many computer systems that are exercised in the course of determining eligibility for state services by a rules engine. So, the rules engine gets the answers on an application. It validates those applications against government interfaces. When it's through doing this whole process of creating a data set that represents a household, then it applies these 30,000 rules and spits out some options for which the individual may or may not be eligible, because then there are other rules that will execute. That's a very complicated process, but it's not a process for AI, because AI is not good at just running through a deterministic set of rules in exactly a prescribed order as exactly specified by regulations. The AI would be better at just kind of the human-level understanding of what a person is eligible for.

Paul, 34:32

And that would be mostly correct, but it wouldn't be acceptable to government. Government wants those deterministic rules because they have to get the same answer for the same inputs every time, as per the regulated body. Now, what AI can do is guide users into informing them what they should apply for or not apply for at a general level. Because there are certain things or indicators that give you an absolute certainty that applying for some things will never be fruitful. For example, if you're infertile, there's no point in you looking for benefits that would benefit parents because you're not going to be a parent, you're infertile. On the other hand, there are things that will benefit adoption.

Paul, 35:37

So if building a family is your concern and those are the assistance programs you're concerned about, then an AI can certainly direct you towards adoption as opposed to fertility. That's just off the top of my head; there are much better examples, things like certain disabilities. You either have them or you don't. And there's no point in digging into disability benefits if you don't have those disabilities, or if you have disabilities, then maybe there are some other things that don't necessarily apply to you. AI is just better as a guide, even if you have to run through a rules engine. Now what is a smart contract? That's an interesting question. Is it kind of sort of like AI, or is it more like a rules engine? And the answer is, generally speaking, smart contracts act like rules engines. They're deterministic.

Paul, 36:35

You don't have on a blockchain the kind of computational bandwidth it takes to do AI, but you can integrate AIs into blockchains. The complexity of the AI can sit outside the blockchain. Now then, what does Accumulate do for an AI? That is where the observations of an AI can be recorded, and things like images and documents and whatnot can be recorded in time, so that you can know the difference between AI-generated content and actual data and content, even if it has an AI origin. So, for instance, a camera taking an image can create a hash of the image and sign that hash and place it on a blockchain.

Paul, 37:49

And now you have proof of a photo that is not AI generated, no matter how good AIs get in the future, because they can't go back in time and stick a hash in a blockchain. And so having the kinds of cryptographic proofs deterministically constructed in a protocol like Accumulate becomes a barrier to an AI-driven world where reality becomes impossible to distinguish from AI construction of the past. I believe that blockchain and AI are destined to constantly evolve together in order to manage a historical proof in the face of a technology that can construct whatever darn proof it wants to.
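The camera example can be sketched in a few lines of Python as an editorial illustration. This is a minimal sketch under stated assumptions: an in-memory list stands in for the blockchain, and an HMAC with a hypothetical device secret stands in for the camera's signature. A real device would more likely use an asymmetric signature, and nothing here reflects Accumulate's actual record format.

```python
import hashlib
import hmac
import time

DEVICE_KEY = b"hypothetical-camera-secret"  # stand-in for a device signing key
LEDGER: list[dict] = []                     # stand-in for an append-only chain


def attest_image(image_bytes: bytes, key: bytes = DEVICE_KEY) -> dict:
    """Hash the image, tag the hash with the device key, and record it."""
    digest = hashlib.sha256(image_bytes).hexdigest()
    tag = hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()
    record = {"sha256": digest, "tag": tag, "recorded_at": int(time.time())}
    LEDGER.append(record)
    return record


def verify_image(image_bytes: bytes, record: dict, key: bytes = DEVICE_KEY) -> bool:
    """Check that the bytes match the recorded hash and the tag is genuine."""
    digest = hashlib.sha256(image_bytes).hexdigest()
    expected = hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()
    return digest == record["sha256"] and hmac.compare_digest(expected, record["tag"])


photo = b"raw sensor bytes"
entry = attest_image(photo)
print(verify_image(photo, entry))              # True
print(verify_image(b"edited bytes", entry))    # False
```

The only thing stored is the hash and its tag, not the image. The timestamp is meaningful only because the real ledger cannot be rewritten after the fact, which is the property the list here merely imitates.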

Paul, 39:03

But then on the crypto side, all of the data that we construct in Accumulate cannot really properly be vetted without something as powerful as an AI to run through all the cryptographic proofs to truly know what reality is. So, they both have to work together, they're both intertwined. And that's kind of the future and the problem that Accumulate is aiming to solve.

Michael, 39:46

So in a way, Accumulate is basically providing a platform for data integrity, data fidelity, but also data which AI agents can then read, verify, process, and really have a degree of confidence in the origin and validity of.

Paul, 40:07

Yes, exactly. I mean, we can put it a different way. The blockchain creates all kinds of opportunities to audit and verify and validate the data and the transactions that it holds and preserve those proofs forever, at least as long as the blockchain exists on disks everywhere. But that's of limited value without an auditor. And the only technology that's likely to keep up with the blockchains as they proliferate in human interactions, the only way we can keep up with those proofs and validate those proofs, is to have something like an AI as the auditor. We need the ability to audit. We need an auditor, and an AI needs some way to be able to audit its own activities, and probably only AI will be able to dig through all those audit trails to hold AI accountable.

Paul, 41:31

So one of the things that I've heard adapted: there's a saying that the cure to free speech is more free speech. One of the observations is that the only protection or defense against AI is more AI, but fundamental to that is a cryptographic proof of the AI that the AI can process to evaluate AI itself. So yeah, you know, it all works together. And by the way, I'm going to point out all this is possible on Bitcoin. The only problem is an audit of Bitcoin requires auditing all of Bitcoin. And it works on Ethereum. The only problem is auditing Ethereum requires auditing all of Ethereum. And so, you need an architecture that allows you to audit the bits and pieces that matter. And so far, to my knowledge, Accumulate is really about the only architecture that does that.


Paul, 42:55

Fundamentally, we designed it to be able to audit bits and pieces of the blockchain, and nothing can suck us into requiring a full blockchain audit. The architecture is effectively tree-like, and you never have to audit every limb to audit a limb of the tree.
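The idea of auditing one limb without auditing the whole tree can be illustrated with a generic Merkle tree, sketched here in Python as an editorial aside. This is a textbook construction offered under the assumption that it conveys the general principle; it is not Accumulate's actual data structure, and all function names are illustrative.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Return every level of the tree, from hashed leaves up to the root."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:                 # pad odd levels by repeating the last node
            level = level + [level[-1]]
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Collect the sibling hashes on the path from one leaf to the root."""
    proof = []
    for level in levels[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))  # (hash, sibling_is_left)
        index //= 2
    return proof


def verify(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the root from one leaf and its proof; no other leaves needed."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


records = [b"acct-a", b"acct-b", b"acct-c", b"acct-d", b"acct-e"]
levels = build_levels(records)
root = levels[-1][0]
proof = prove(levels, 2)
print(verify(b"acct-c", proof, root))   # True
print(verify(b"forged", proof, root))   # False
```

The proof for one record holds only one hash per tree level, so its size grows with the logarithm of the number of records rather than with the full data set.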

Michael, 43:33

Is there anything else you would say sets Accumulate apart from other blockchains with respect to addressing needs of enterprises, developers, users, so on?

Paul, 43:46

Yeah, I mean, there is a pretty critical aspect of Accumulate that we haven't discussed, and that is every artifact in Accumulate is addressed by a URL. And so, we're very URL based. In fact, we're designed to be an Internet protocol, just like HTTPS or FTP or any number of other Internet protocols that we use. That means that there's an analog for domains, which we think of as Accumulate Digital Identities, ADIs, and the token accounts are URLs. Now, I don't mean that the URL maps to an address like you can do in Ethereum or many other protocols. No, I mean that the token account literally is a URL, and the protocol routes URLs to the proper part of the network for execution and operations against any particular account or ADI or any other structure.
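As an editorial illustration of routing by URL, the Python sketch below hashes the identity portion of an account URL to pick a network partition, so every account under the same identity lands in the same place. The URL shapes, the partition count, and the hashing scheme are all assumptions made for this example and are not taken from the interview or from Accumulate's specification.

```python
import hashlib
from urllib.parse import urlparse

PARTITIONS = 4  # hypothetical number of network partitions


def route(url: str, partitions: int = PARTITIONS) -> int:
    """Pick a partition from the identity (authority) part of the URL."""
    authority = urlparse(url).netloc.lower()
    digest = hashlib.sha256(authority.encode()).digest()
    return int.from_bytes(digest[:8], "big") % partitions


# Hypothetical account URLs under two identities.
print(route("acc://paul.example/tokens"))
print(route("acc://paul.example/data/logs"))
print(route("acc://michael.example/tokens"))
```

Under this scheme the first two URLs always route to the same partition because they share an identity, while the third may route elsewhere, which is one simple way operations on unrelated accounts could proceed in parallel.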

Paul, 45:07

And in Accumulate, all those operations can operate in parallel because all operations are done within the context of a URL. Now where is the security then? Well, Accumulate uses key books, and key books hold the keys, and they define how signatures are created. And a signature isn't just a simple key signature, but rather a potential combination of signatures that can come from keys, but can also come from other key books. And so, signatures can be combined in a key book to produce a signature from that key book that can authorize a transaction. And so, accounts like token accounts and data accounts have authorities tied to a key book or an ADI's key book. And so a valid signature from one of those authorities has to come into the account in order to authorize a transaction, let's say a token transaction, because that's pretty simple.

Paul, 46:30

That means if I create a token account, the key book that is the authority for that token account may require a single sig, or it may require a multisig, but the token account doesn't care. It just needs a signature that's produced from that key book. Now, if I go and look at the key book, it's a structure on the blockchain, which means that if it only holds one key, I can decide that my good friend Michael and I should both be an authority on a particular key book. Let's say it's my key book to begin with: I can add Michael's key into that key book. And now either we're both required to sign a token transaction, or either of us can sign that token transaction.

Paul, 47:36

But that decision (both of us, either of us, or just me) is made within the key book and is opaque to the token transaction, which just gets a signature. Another thing that Accumulate does that other blockchains really don't is that it accumulates these signatures on chain and manages them as pending transactions until all the signatures are collected, and it times those out and throws them away after a time period. So a lot of this infrastructure, and the rules and possibilities for managing authorization of transactions, is built into Accumulate and is flexible enough to do about anything anyone ever wants to do. And that really sets us apart, because it means there's this huge opportunity to model all kinds of security approaches that other blockchains just don't do.
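The key book behaviour described above (single-sig or multisig decided inside the book, nested books, and pending transactions that collect signatures until they time out) can be modelled roughly as follows. This is a toy sketch: `KeyBook`, `PendingTransaction`, the threshold field, and the string key IDs are hypothetical names, and no real cryptographic signing is performed.

```python
from dataclasses import dataclass, field


@dataclass
class KeyBook:
    signers: list      # each entry is a key id (str) or a nested KeyBook
    threshold: int     # how many signers must approve

    def satisfied(self, signed: set) -> bool:
        """True once enough keys or nested key books have signed."""
        count = 0
        for signer in self.signers:
            if isinstance(signer, KeyBook):
                count += signer.satisfied(signed)
            else:
                count += signer in signed
        return count >= self.threshold


@dataclass
class PendingTransaction:
    authority: KeyBook
    expires_at: float
    signed: set = field(default_factory=set)

    def sign(self, key_id: str, now: float) -> str:
        """Record a signature; the account only ever sees the outcome."""
        if now >= self.expires_at:
            return "expired"   # timed out; the pending entry is discarded
        self.signed.add(key_id)
        return "authorized" if self.authority.satisfied(self.signed) else "pending"


both = KeyBook(["paul-key", "michael-key"], threshold=2)
either = KeyBook(["paul-key", "michael-key"], threshold=1)

tx = PendingTransaction(both, expires_at=100.0)
print(tx.sign("paul-key", now=10.0), tx.sign("michael-key", now=20.0))
```

Switching the authority from `both` to `either` changes the policy without touching the transaction or the account, which is the opacity the passage describes.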

Paul, 48:58

I mean, you could do a lot of this with smart contracts, but smart contracts have an auditability problem that will make it difficult to automate decisions on the blockchain, because, again, cryptographic proofs on Ethereum would require a full Ethereum node. That's not something that fits on your smartphone. The kind of cryptographic proofs we build easily fit on your smartphone and will forever. So that's a huge difference with Accumulate that we hadn't discussed.

Michael, 49:45

So what originally drew you to Bitcoin and how have you been involved with the ecosystem?

Paul, 49:52

Sure. Well, the thing that attracted me to Bitcoin is this whole idea of a distributed, autonomous network of value that was permissionless and outside the control of any particular party, and that had the game theory to create value without force: without some sort of government just saying, "respect this dollar," and without a commodity like gold or steel or oil, or whatever you think you might be able to construct a currency out of. None of that exists. It's just a solution to the Byzantine Generals Problem and game theory, and you're done. And it only uses a bit of honestly not that hard-to-understand code and some very basic cryptography. And it does something that the dollar can't do.

Paul, 51:03

North Korea counterfeits $100 bills every day. And while North Korea does mine Bitcoin, it can't counterfeit one at all. The dollar bill has treaties and the world's largest military force behind it, and that doesn't stop North Korea from counterfeiting. Bitcoin just has a few programmers, and they did stop North Korea from counterfeiting. So, yeah, just a fascinating technology. And I guess you also asked how I got involved and what I did in Bitcoin. I just became a big advocate and started meetup groups, meetup groups I still run. Yeah.

Michael, 51:58

So how did your early involvement with Bitcoin lead to your contributions in Ethereum and what were the early days of the project like?

Paul, 52:06

Oh, well, I got involved in colored coins at the very beginning. I promoted a different way of doing tokenization on top of Bitcoin than some of the other mechanisms for colored coins. Colored coins are a way of identifying a particular Bitcoin transaction as one that represents not just its Bitcoin value but also some other value. For instance, it could represent your ownership of a piece of paper, or so much gold, or whatever. A piece of paper would be good for copyright if the piece of paper had a hit song on it, that sort of thing. Vitalik was very interested in colored coins and in fact did a summary of colored coins that included my own algorithm for managing colored coins.

Paul, 53:17

And it's that colored coin work that first got Vitalik interested in something called Mastercoin, which became Omni, a platform that then launched Tether on Bitcoin. It got Vitalik very interested in how you do more on the blockchain. But he thought Omni (well, I guess it was Mastercoin at the time) was too limited, and he thought Counterparty, which derived from Mastercoin, was too limited as well. And he started building this platform for Ethereum. We became acquainted at conferences, particularly the Texas Bitcoin Conference that I ran in 2014. We had a lot of conversations, and then I got to evaluate some of the early ideas for the EVM and was able to contribute and dissuade them from some things. I don't value my contribution that highly.

Paul, 54:30

I mean, I believe they would have come around to what I told them anyway, but I probably hurried it along. The answer is that the engine, the EVM, needs to be a threaded interpreter. This is a technology that was used all the way back to Visual Basic. But in order to build a small, tight, fast, easy-to-use engine to run your smart contracts, a threaded interpreter is basically what you have to use. And so I made that small contribution to Ethereum. Threaded interpreters, yeah: basically, the origin goes all the way back to Forth in the 60s. I did at least a couple of decades of work in threaded interpreters back in the 80s and 90s. And so when we were talking with Ethereum, I had a whole lot of experience in how to construct these things.

Paul, 55:44

I guess I could say another thing about threaded interpreters. Threaded interpreters built on Forth have been controlling telescopes and satellites from the 60s, 70s, 80s: the Voyager satellites. If anyone has ever wondered how they keep those things running, when they said they had this antenna program problem and they were able to reprogram it, how do they do that? They did it with a threaded interpreter, a Forth program running in the satellite. The entire code base, compiler, and development environment can fit in really tiny computers. And oh, by the way, that's the problem Ethereum has. They need to create very small, tiny smart contracts that fit into a blockchain without just exploding it all to hell.

Paul, 56:41

And so no matter how fluffy one might think Ethereum is, it would be a lot worse if they tried to do something more sophisticated than a threaded interpreter in the blockchain. And by the way, Bitcoin's interpreter is also a threaded interpreter. I'm not aware of anyone that does smart contracts on any kind of interpreter other than these threaded interpreters.

Michael, 57:12

I guess I'm trying to clarify like what a threaded interpreter is, because when I think of threaded interpreter, I'm thinking of let's say the scripts being interpreted on multiple threads. But I'm guessing I'm incorrect there because Ethereum is definitely like a single thread in that regard.

Paul, 57:29

Yeah, yeah, yeah. The confusion is the ambiguity of English. In the case of a threaded interpreter in compiler theory, you're talking about a stack-based engine. You have a set of operators that manipulate the stacks, so you put all your parameters on the stack, and you maybe do a jump to another portion of your code, and it will pick up those parameters from the stack and execute on them. So everything is in postfix notation, which, by the way, is like German. In German you say "the boy the ball throws," that kind of structure. You have your parameters and then you follow them with the operator. And so it's these stack-based engines or interpreters that are also called threaded interpreters.
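The postfix, parameters-then-operator style can be shown with a tiny stack evaluator. This is a minimal sketch; the function name `eval_postfix` and the three-operator vocabulary are illustrative assumptions, not any particular Forth or EVM instruction set.

```python
import operator

# Each operator pops two values from the stack and pushes one result.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def eval_postfix(source: str) -> int:
    """Evaluate whitespace-separated postfix: operands first, operator last."""
    stack = []
    for word in source.split():
        if word in OPS:
            right = stack.pop()
            left = stack.pop()
            stack.append(OPS[word](left, right))
        else:
            stack.append(int(word))  # anything else is a literal operand
    return stack.pop()


print(eval_postfix("2 3 + 4 *"))  # (2 + 3) * 4
```

No operator names its arguments: each one simply takes whatever the previous words left on the stack, which is what keeps the encoded program so small.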

Paul, 58:55

Let me double check, because I want to make sure that I am not lying to you in some way. The net result of using a stack to pass parameters is that your code looks like a sequence of subroutine calls. You just put them in a thread, and executing the program is just executing each subroutine call in order. There are no instructions for collecting parameters and pushing parameters around; the parameters are already on the stack. And there are a lot of flavors of threaded interpreters. You have direct threading, where the thread is a list of memory addresses that point to machine code. And so the inner loop of your interpreter is fetch, execute, loop; fetch, execute, loop.

Paul, 01:00:14

And what happens is, when you do a subroutine call, it pushes the return address and points the interpreter somewhere else in memory. And the interpreter keeps going: fetch, execute, fetch, execute, fetch, execute. When it finally fetches and executes a piece of code that is a return, what that code does is pop the return address, set the program pointer to that address, and return. And suddenly the inner loop of the interpreter is pointing back where it came from, and it continues executing. So yeah, you have direct threading, indirect threading, and token threading. And Forth does token threading.

Paul, 01:01:11

Because if you do token threading, you can do a byte interpreter where every subroutine call is a byte, and if the byte is a primitive operator, you interpret that byte as a plus or a minus or something like that. But if it is a special byte that says "call something," then the next two bytes or four bytes are the address of a subroutine. And so this is a byte-encoded threaded interpreter, and the code can be very compact. That's what they used in Voyager, by the way, because you don't have any room.
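A byte-encoded interpreter of the kind described, with primitive opcodes, a "call" byte followed by a two-byte address, and a return stack, might look like the sketch below. The opcode numbering, the two-byte big-endian address, and the sample program are assumptions made for illustration; they are not the encoding used by Forth, Bitcoin Script, or the EVM.

```python
# Hypothetical one-byte opcodes.
PUSH, ADD, MUL, CALL, RET, DUP, HALT = 1, 2, 3, 4, 5, 6, 0


def run(code: bytes) -> list:
    """Inner loop: fetch a byte, execute it, repeat."""
    data, returns, ip = [], [], 0
    while True:
        op = code[ip]                 # fetch
        ip += 1
        if op == PUSH:                # next byte is a literal
            data.append(code[ip])
            ip += 1
        elif op == ADD:
            b, a = data.pop(), data.pop()
            data.append(a + b)
        elif op == MUL:
            b, a = data.pop(), data.pop()
            data.append(a * b)
        elif op == DUP:
            data.append(data[-1])
        elif op == CALL:              # next two bytes are the subroutine address
            target = int.from_bytes(code[ip:ip + 2], "big")
            returns.append(ip + 2)    # where to resume after RET
            ip = target
        elif op == RET:               # pop the return address and jump back
            ip = returns.pop()
        elif op == HALT:
            return data
        else:
            raise ValueError(f"unknown opcode {op} at {ip - 1}")


# main: push 3, call "square" at address 9, push 1, add, halt
# square (address 9): dup, mul, ret
program = bytes([PUSH, 3, CALL, 0, 9, PUSH, 1, ADD, HALT, DUP, MUL, RET])
print(run(program))
```

The whole program, including the subroutine, is twelve bytes, which gives a feel for why this style suits machines and ledgers where every byte counts.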

Michael, 01:01:55

Okay, I was thinking that the stack-based interpretation of bytecode almost brings to mind Bitcoin Script.

Paul, 01:02:06

Yes, it is. That is a token-threaded interpreter. And this is all 1960s compiler theory, and it predates almost everything. It doesn't quite predate Fortran, but it predates about everything else. In fact, Forth was multi-threaded back then. I don't know what they called it at that point in time. They may have called it multi-threaded, I don't know. But your context switch is so cheap: each execution thread has a return stack and a data stack, and all you have to do is swap out the return stack, the data stack, and the instruction pointer with another thread's, and you've done a complete context switch. And so Forth was running multiple threads long before MS-DOS or a lot of other OSes.
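The cheap context switch can be sketched as cooperative round-robin scheduling, where a task's entire context is just its instruction pointer and its two stacks. The `Task` class, the tuple-based instruction format, and the `yield` operation are hypothetical simplifications, not a reconstruction of any historical Forth system.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    program: list                                  # list of (op, arg) tuples
    ip: int = 0                                    # instruction pointer
    data: list = field(default_factory=list)       # data stack
    returns: list = field(default_factory=list)    # return stack (unused by these tiny programs)
    done: bool = False


def run_round_robin(tasks, log):
    """Switching tasks means switching which (ip, data, returns) triple is live."""
    current = 0
    while not all(task.done for task in tasks):
        task = tasks[current]
        while not task.done:
            op, arg = task.program[task.ip]
            task.ip += 1
            if op == "push":
                task.data.append(arg)
            elif op == "add":
                b, a = task.data.pop(), task.data.pop()
                task.data.append(a + b)
            elif op == "emit":
                log.append((arg, task.data[-1]))
            elif op == "yield":
                break                              # give up the processor voluntarily
            elif op == "halt":
                task.done = True
        current = (current + 1) % len(tasks)       # the whole context switch


task_a = Task([("push", 1), ("emit", "A"), ("yield", None),
               ("push", 2), ("add", None), ("emit", "A"), ("halt", None)])
task_b = Task([("push", 10), ("emit", "B"), ("yield", None),
               ("push", 5), ("add", None), ("emit", "B"), ("halt", None)])
log = []
run_round_robin([task_a, task_b], log)
print(log)
```

Nothing is copied or saved at the switch; each task's state already lives in its own small structure, so the scheduler only changes which one it is looking at.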

Paul, 01:03:23

Those OSes had some multi-threading going on, but it was very primitive, and it was very hard to run actual programs at the same time. But you could do that in Forth inside of a 4K processor, where you only had 4K bytes for program and data, or an 8K processor.

Michael, 01:03:51

That makes sense.

Paul, 01:03:52

Oh, you go down the rabbit hole of Forth and there's just all kinds of really crazy things. I loved programming in Forth, and we wrote a system back in the 80s called Fifth, and I still have it on my GitHub. You can run a DOS emulator and run Fifth and see how crazy that code is. That code gets really crazy because it's completely write-only; you can't read the code. I look back on the code I wrote and I'm like, I have no idea what I'm doing here.

Michael, 01:04:34

So to go back a bit, what was your early background like and what drew you to the tech industry and how would you describe your work prior to discovering Bitcoin?

Paul, 01:04:45

Oh, that's a lot of questions. Let me take the first one and then remind me of where to go after that. How did I get into programming? I can tell you how I got into programming. One, I'm old. Okay, so there's that. I went to college at Louisiana Tech because it was an engineering school that was close to where I grew up, and everybody I knew went there. And so, what the heck, why not, right? First quarter, I had no idea what I was going to major in. Maybe leaning towards medicine, maybe not. But I was walking down the hall one day and I saw these guys with their decks of cards. Yeah, that's how old I am. They were programming. We did programming at Tech on an IBM 360 mainframe.

Paul, 01:05:43

And we did our programming on cards, and we ran them through a card reader. Anyway, these guys were talking about this language they were using. Now, I already knew how to write a loop from 1 to 10 in BASIC, and I could run it on about any computer that ran BASIC. I'd just type it out, and the idea was to see how fast the computer could print 10 numbers. Believe it or not, in the early 80s, that was a good test for how fast the computer was. Maybe you'd go to 100 if it was too fast. But anyway, I saw these guys talking about languages and I thought, well, I'll take a Fortran class next round. And I took the Fortran class and so fell in love with programming that I never looked back. I became a developer.

Paul, 01:06:44

Everything anyone said about programming in those early days, when I was 18, it was like it came with the songs of angels and was a complete revelation to me. I just absorbed it like a sponge. And I can say I never made anything less than an A in any of my computer science courses. I cannot say that about English, because I can't spell my way out of a wet paper bag. But as soon as I got past spelling tests, I did pretty well in English too. I mean, I was never really concerned about grades, but I just loved technology from the moment that I started in engineering, and I loved engineering. So that's how I got into programming. And being innovative and thinking out of the box was what I was doing from the very beginning.

Paul, 01:07:53

What was the next part of the question?

Michael, 01:07:55

So how would you describe your work prior to discovering Bitcoin?

Paul, 01:08:00

Oh, I always looked for innovative solutions. When I hit graduate school at Texas A&M (I went to A&M because all my professors back at Tech came from A&M, and I admired them quite a bit), I ran into a fellow named Cliff Click. Cliff, after our collaborations, became a key figure in Java, creating JITs and improving Java's performance to match C in most benchmarks. But anyway, in the early days, Cliff and I did all this innovation in Forth and in building development environments that do crazy things that IDEs today can't do.

Paul, 01:09:04

Because one of the great things you can do with Forth is use it to build your development environment, and then use your development environment to build your programs, but also to extend the compiler to compile your programs the way you want to compile them. So I got really into that. Well, that resulted in me writing the first clone of PostScript to ship in a commercial printer. Our printer, the Printware 720, shipped in December of 1987, and that was before any other PostScript clone, as opposed to Adobe PostScript, ever shipped. And oh, by the way, we also implemented a PDF viewer before Adobe shipped a PDF viewer. Our mistake was not realizing that was a good product.

Paul, 01:10:11

It's too bad we didn't do that. But all that effort ran for about seven years, and I really got into all of that: the interpreters and the ways to use them to solve problems. Then, once I got onto the eligibility determination project in Tucson, I was one of the chief architects of the solution. They had already compiled all of the handbook into decision tables, and that was going to be our spec for the real system. I was in the effort just to define the architecture for some future developer. So I specced out: well, if the spec is decision tables, then just execute those decision tables. And again, I used the threaded interpreters from my past to build a rules engine to execute decision tables, DTRules. And that became the foundation of eligibility determination for Texas, Michigan, and New Mexico.
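"If the spec is decision tables, then just execute those decision tables" can be illustrated by treating the table itself as data that a small engine walks. This is a minimal sketch: the rule rows, field names, and outcomes are invented for illustration and do not reflect any real eligibility policy or the actual DTRules table format.

```python
# Each row pairs a set of required conditions with an action.
# A condition left out of a row means "don't care" for that row.
ELIGIBILITY_TABLE = [
    ({"resident": True, "income_below_limit": True}, "approve"),
    ({"resident": True, "income_below_limit": False}, "deny: income"),
    ({"resident": False}, "deny: residency"),
]


def decide(case: dict, table=ELIGIBILITY_TABLE) -> str:
    """Return the action of the first row whose conditions all match the case."""
    for conditions, action in table:
        if all(case.get(name) == value for name, value in conditions.items()):
            return action
    return "refer to caseworker"   # no row matched


print(decide({"resident": True, "income_below_limit": True}))
```

Changing policy here means editing rows in the table rather than rewriting control flow, which is the property that lets subject matter experts maintain the rules themselves.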

Paul, 01:11:36

These were the first rules-engine-based eligibility systems to go into production in the US, the big advantage being that the actual subject matter experts could edit these decision tables and understand them. It took developers out of the process of automating eligibility, and it made the eligibility system easy to update, maintain, and extend. Those rules engines are still running today, to my knowledge. I know they're running in Texas. And none of the other commercial systems really worked as well, because this decision table view for the subject matter experts is just so powerful and relatively unique compared to what other rules engines do when they claim they do decision tables. So that was what I was doing just prior to getting into the blockchain. That's pretty much a thumbnail of my career.

Michael, 01:12:51

So broadly speaking, where do you see the industry heading and how do you believe ongoing trends will impact society? For lack of better words?

Paul, 01:13:01

Okay, well, I believe that the broad trend is to use Bitcoin for value. I think Bitcoin nailed down the secure, worldwide, permissionless blockchain to define value. That use case allows for settlement between platforms and companies and governments, it allows for security of the transactions, and it allows for a definition of value and preservation of wealth. So that makes Bitcoin the ideal commodity underneath a value system. And it's a value system that is far better suited to back currency than gold, for the single reason that gold cannot be audited easily. If you've got billions of dollars or trillions of dollars' worth of gold, the only way you can audit that is to sample it.

Paul, 01:14:28

And if you sample it, then there is a margin of error that you're accepting, that some of that gold may not really be there. But with Bitcoin, it's a matter of looking at an explorer and looking at the digits, and for any amount, you can be absolutely certain of the value that is held or controlled by a company or a platform. That advantage is just massive, and it's too great to accept gold as an alternative. The other big advantage of Bitcoin is how divisible it is. Gold as a basis for a worldwide currency would just blow up gold.

Paul, 01:15:26

Gold would be worth so much that all the applications of gold would go out the window, and gold itself would become incredibly difficult to audit, for many practical reasons, and you certainly wouldn't be able to hand somebody $20 worth of gold. It'd be such a tiny little amount that how do you know if it's $20 or $40? You wouldn't be able to tell. Bitcoin is a number and a value on a blockchain. And so, no matter how valuable it gets, it won't be any problem to use Bitcoin to represent 20 bucks. So, for all these reasons, Bitcoin has that value role. That leaves the data problem, but the data problem is huge and massive. And of course that's the thing that Accumulate is aimed at.

Paul, 01:16:24

The smart contracting, high-transaction platforms like Solana and whatnot, they have their uses. You can tokenize Bitcoin and do Bitcoin transactions on Solana very cheaply. And if you ever accumulated enough Bitcoin (today, that number would be a few thousand dollars), then you can very cheaply convert that to real Bitcoin. But when Bitcoin gets to values of millions or tens of millions or hundreds of millions of dollars per Bitcoin, then obviously the average person would never really hold Bitcoin. The cost of Bitcoin transactions will go incredibly high. And you're never going to spend three quarters or 90% of your Bitcoin just to have honest-to-goodness Bitcoin value. Or maybe you will, who knows, but that's unlikely.

Paul, 01:17:29

So yeah, I think that Bitcoin will largely be held in second layers, but all those second layers are auditable. That is a massive improvement over gold, because gold historically always fails eventually, because people want paper representations of gold, and they have to. Again, go back to the $20 problem. Once gold is worth as much as it would be as a world currency, the amount of gold just can't be shaved off and used directly. So you're going to use paper. Well, once you use paper, that paper can get lost, and that paper can be printed beyond how much gold some entity, like a bank, is holding, and eventually it gets devalued. There's too much paper out there. The paper runs to the bank, and then there's a bank run.

Paul, 01:18:33

Bitcoin can keep balances in full view of everyone. Everyone can audit their bank, and those problems can be avoided. So I believe Bitcoin gets there, Accumulate handles the data, and all of the other blockchains have various niches and uses, and they will all make the world a better place, all built off this foundation of Bitcoin.

Michael, 01:19:03

So I guess you could say you envision a multi chain future and even cross chain.

Paul, 01:19:10

Oh, there's no doubt you'll have a multi-chain future. Yeah, there's a multi-chain future and there's a cross-chain future. Absolutely. But I'm saying that from a historical perspective. I mean, how many currencies do we have? We don't have one currency; we have a bunch of currencies. How many different cars do we have? We have not just one manufacturer of cars; we have a bunch of them. How many cell phones do we have? Well, we have a lot of cell phones from a bunch of different sources. There isn't a single technology you can name where we have one type and one source from one mode of thought. That does not exist in technology. So anyone who thinks that it's Bitcoin and Bitcoin only, and there will never be any other valid platform, they're just lying to themselves.

Paul, 01:20:06

There's no way you can get humanity behind one solution to a problem. There's not even going to be just one Bitcoin that has value, that's used in transactions. XRP is not going to go away anytime soon, and Solana is not going to go away anytime soon, and Ethereum is not going to go away anytime soon. And I could go down the list. There are tons of cryptos that will be here forever. There will probably be more cryptos than there ever were currencies, because it's just too easy to manage. And that's fine. There's nothing wrong with that. There are different applications for different chains. But Bitcoin will always be the foundation. It has a 60% dominance right now.

Paul, 01:20:56

The real question is, is Bitcoin going to hold at 60% dominance, or will it eventually rise to 90% dominance, or will it fall to 30% dominance? I can't tell you that. And I don't really have a good theory to support it. Or maybe "I have good theories to support either direction" is the right way to put it. But I don't know which way will win.

Michael, 01:21:24

What projects or initiatives do you anticipate tackling in the future, with Accumulate or otherwise?

Paul, 01:21:32

Oh, good question. Wow. There are a lot of projects that I would attack, but the holy grail for me would be to completely reorganize how we build applications and systems, particularly how we build platforms. AI and blockchain present two really interesting possibilities to absolutely upend how programming is done and how large computer systems are built and managed and secured. That's a huge topic, so let me just summarize it. I can imagine a day when you go to the blockchain and you're able to use AI to generate a script to deploy a multi-system application across multiple data centers that is profoundly secure and operational almost at the push of a button, that tracks bugs as they are detected and deploys self-healing patches to your system. All nearly automated, nearly independent of any single person.

Paul, 01:23:26

Today, DevOps is automated to a degree, run by people who have an understanding of the technologies that we use to deploy programs, and they don't have a strong knowledge of exactly what's going on, because a lot of that is automated out. That's just going to go up from where we are now by orders of magnitude. AI has the potential of eliminating programming languages, and you're going to need the blockchain to create that security. It goes back to what we've already talked about, of what the application does. But why have a computer language that interprets code into machine code for deployment in an operating system that is constructed of abstractions to allow people to kind of understand what's going on? Why do all of that when an AI can go straight to code and eliminate all of the attack vectors that are used by hackers?

Paul, 01:24:47

Hackers can make assumptions about how code is deployed because we deploy it on top of these commonly understood abstractions and platforms, when those abstractions and platforms don't actually support the application at all. So I can see the elimination of operating systems as an actual physical thing you deploy. I can see the elimination of programming code as we know it today. The one thing I cannot see is the elimination of Git. Git is probably one of the most innovative, impactful platforms that we ever created for code, and it doesn't get the credit it deserves. It has revolutionized how we build code and how we organize programming teams. Open source would not be what it is, even Bitcoin would not be what it is, without Git. So I'll leave it at that.

Paul, 01:26:03

I could spend three hours on the future of computer science, given the blockchain, given Git, given AI. I believe it's completely underestimated how transformative that's going to be.

Michael, 01:26:21

Yeah, we're going to have a follow-up conversation. Before we draw to a close, is there anything you haven't had the chance to discuss?

Paul, 01:26:31

Oh, I think we've discussed enough. I'm a little too exhausted to claim that there are other things, but I think there's a lot more we could discuss. I mean, there are governmental aspects to all of this, and there are regulatory aspects to the blockchain and AI. There are economic upheavals involved here, and science. I think one of the most innovative things I listened to came from Willie Soon, where he asserted that you could use AIs in a small team to do what the IPCC does at a fraction of the cost, eliminating the governmental interference aspect of evaluating the climate and evaluating the threats to humanity that climate change might have. And there are threats. Right. So, I mean, ask anyone who's drowned. Well, wait, you can't.

Paul, 01:27:49

But yeah, I mean there's just so many things that we could talk about and the blockchain enters into all these things.

Michael, 01:27:59

I'll go ahead and include external links to you and Accumulate Network in the show notes. But anyway, Paul, thank you so much for taking time out of your day to discuss your contributions to so many different domains in the industry and your insight into all of it. And I definitely want to speak to you again in the future.

Paul, 01:28:20

Well, thank you Michael and I look forward to talking to you. This was a great opportunity and I hope everybody got something from it. Thanks.

Discussion